Imagine attending a conference or meeting held in a language you don't speak, and still understanding every word in your native tongue. Such a system transcribes spoken utterances into text in real time using automatic speech recognition (ASR) and natural language processing (NLP), then translates that text into regional languages using machine learning. Language barriers come down like never before, letting people from all over the world engage with and understand live events, video calls, and lectures.

TalkLocal is a prototype solution, packaged as a Python library, that handles most of the work involved in real-time transcription, multilingual translation, and multi-format subtitle creation, all inside a Python environment. By changing the configuration settings, you can also get the original transcript and the translated output as text files. These files can be saved for later use, incorporated into other business applications, or used for generative AI (artificial intelligence) tasks such as summarization.

With broadcasting software such as Streamlabs Desktop, the library makes it easy to display subtitles or captions while streaming to well-known platforms like YouTube, X (Twitter), Instagram, Twitch, and so on. TalkLocal is built on Amazon Transcribe and Amazon Translate, which provide real-time transcription and translation. Amazon Transcribe is an automatic speech recognition service that uses machine learning models to convert audio to text. Amazon Translate is a neural machine translation service that translates text across a broad set of supported languages. Powered by deep learning, Amazon Translate delivers fast, high-quality, and affordable language translation.
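To make the translation half concrete, here is a minimal sketch of a single Amazon Translate call through boto3's `translate_text` operation. It is not TalkLocal's internal code: the function name `translate_segment` is hypothetical, and the client is passed in so you can supply a real boto3 client or a test stub.

```python
def translate_segment(client, text: str, source: str = "en", target: str = "es") -> str:
    """Translate one transcribed segment with Amazon Translate.

    `client` is expected to be a boto3 "translate" client, or any object
    exposing a compatible translate_text method (handy for testing).
    """
    if not text.strip():
        return ""  # nothing to translate, so skip the API call entirely
    response = client.translate_text(
        Text=text,
        SourceLanguageCode=source,  # Amazon Translate code, e.g. "en" (Transcribe uses locales like "en-US")
        TargetLanguageCode=target,  # e.g. "es" for Spanish
    )
    return response["TranslatedText"]
```

In practice you would pass `boto3.client("translate", region_name="us-east-1")` as `client`, which requires boto3 and configured AWS credentials. Injecting the client keeps the function easy to test without network access.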

To get started, you can either download the distribution or wheel file from here, or clone the complete solution from GitHub. Because the library uses Amazon Transcribe and Amazon Translate in the background, you will need access to both services.
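This post doesn't list the exact permissions TalkLocal needs. As an assumption, the identity running it needs at least the streaming-transcription and text-translation actions; the sketch below builds such an IAM policy document in Python so it can be reviewed or pasted into the IAM console. Treat the action list as a starting point, not a verified requirement.

```python
import json

# Hypothetical minimal policy for streaming transcription plus translation.
# Which streaming action TalkLocal actually calls (HTTP/2 or WebSocket) is an
# assumption, so both are listed; trim to what your setup needs.
TALKLOCAL_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "transcribe:StartStreamTranscription",
                "transcribe:StartStreamTranscriptionWebSocket",
                "translate:TranslateText",
            ],
            "Resource": "*",
        }
    ],
}


def policy_json(policy: dict = TALKLOCAL_POLICY) -> str:
    """Render the policy as indented JSON for review or the IAM console."""
    return json.dumps(policy, indent=2)
```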

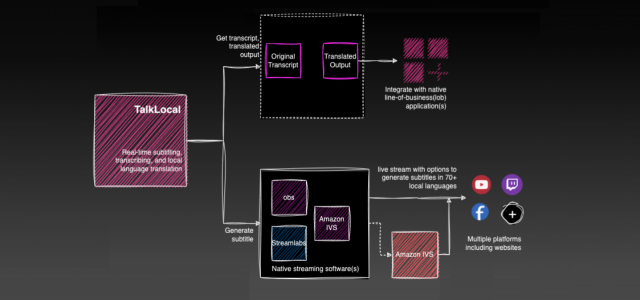

Flow overview

The diagram below shows a straightforward three-step procedure for using TalkLocal: install, integrate, and go live.

Step 1 is to install the TalkLocal library by running the following command in a terminal window (Mac) or command prompt (Windows):

pip install talklocal-1.0.0-py3-none-any.whl

Note: to run the sample Flask application, you also need to install Flask and Flask-SocketIO:

pip install Flask flask-socketio
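To confirm the Step 1 packages landed in the active Python environment, a quick check with the standard library's `importlib.metadata` can help. The distribution name `talklocal` is inferred from the wheel filename, and the helper names are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import List, Optional, Sequence

# "talklocal" is inferred from the wheel filename; Flask and Flask-SocketIO
# are only needed for the sample web viewer.
REQUIRED_DISTRIBUTIONS = ["talklocal", "Flask", "Flask-SocketIO"]


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


def missing_distributions(names: Sequence[str] = REQUIRED_DISTRIBUTIONS) -> List[str]:
    """List the distributions from `names` that are not installed."""
    return [name for name in names if installed_version(name) is None]
```

Once both `pip install` commands succeed, `missing_distributions()` should return an empty list.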

Step 2 is to integrate the library with your streaming software of choice. You can use open-source streaming software such as OBS Studio or Streamlabs Desktop, or AWS services such as Amazon Interactive Video Service (IVS), to stream live to the platforms of your choice. You can also stream to multiple platforms at the same time.

For ease of use, a sample project is included in the GitHub repository. Its directory structure is shown below:

    sample-application/
    ├── output/
    ├── subtitle/
    ├── static/
    ├── app.py
    └── server.py

sample-application/ is the root directory of the project.

- output/: Directory for the text (.txt) files containing the original transcript, the translated output, or both, depending on your configuration. It is optional, but required if you choose to generate either of these outputs.

- subtitle/: Directory containing the subtitle file, either .vtt or .srt, depending on the subtitle format you choose.

- static/: Directory holding the static subtitle_viewer.html file, which contains JavaScript (JS) that refreshes the subtitles at regular intervals.

- app.py: Python file for the application logic. You will need to run it to begin the transcription and translation process.

- server.py: Python script that serves as the main entry point of the sample web application. Because the sample uses Flask to render the subtitles in an HTML page for demonstration, its code is included. Flask is a lightweight, flexible web framework for building web applications in Python, popular for its simplicity and ease of use. If you use the Flask application as demonstrated, you will need to install it. Alternatively, you can use any other tool or framework to display the subtitles in a browser at regular intervals. Depending on the options available in your streaming software, you may also be able to consume the .vtt or .srt file directly in real time.
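Because any tool or framework can display the subtitles, here is a standard-library alternative to the Flask server, offered as a sketch. It swaps Flask for Python's `http.server` and simply serves the project root, so the page in static/ can poll the file in subtitle/. It adds a `Cache-Control: no-store` header so the browser doesn't reuse a stale subtitle file. The class and function names are hypothetical.

```python
import functools
import http.server


class NoCacheHandler(http.server.SimpleHTTPRequestHandler):
    """Static file handler that tells browsers not to cache responses,
    so a polling page always fetches the newest subtitle file."""

    def end_headers(self):
        self.send_header("Cache-Control", "no-store")
        super().end_headers()


def make_subtitle_server(root: str = ".", port: int = 5001) -> http.server.ThreadingHTTPServer:
    """Serve `root` (e.g. sample-application/, containing static/ and subtitle/)
    on localhost:port. Call serve_forever() on the result to start it."""
    handler = functools.partial(NoCacheHandler, directory=root)
    return http.server.ThreadingHTTPServer(("localhost", port), handler)
```

Running `make_subtitle_server().serve_forever()` from the sample-application/ directory would expose the viewer at http://localhost:5001/static/subtitle_viewer.html. Unlike a framework, this serves files only, so any live-update behavior must come from the page's own polling JavaScript.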

Starting with the app.py file, let's walk through the user_input object step by step. The UserInput class serves as a model for configuring the source language, target language, subtitle format, and additional settings. It uses Enum classes to define specific language codes and subtitle formats, providing a structured approach to language selection and input preferences.

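    import talklocal
    # OutputFormat is TalkLocal's output-format Enum; its import path is not shown in this post

    # Define user input parameters
    user_input = talklocal.models.UserInput(
        source_language="en-US",  # Amazon Transcribe locale code
        target_language="es",  # Amazon Translate language code
        subtitle_format="vtt",  # "vtt" or "srt"
        region="us-east-1",  # optional; defaults to "us-east-1"
        output_format=OutputFormat.TRANSLATED_TEXT  # optional; also write translated text to output/
    )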

- source_language: Specifies the source language for transcription (in this case, English with code en-US).

- target_language: Defines the target language for translation. In the example, it is Spanish, with the language code ‘es’. Many target languages are available, each identified by its own code. For the list of supported languages and language codes, refer to the Amazon Translate documentation.

- subtitle_format: Specifies the output subtitle format: WebVTT (“vtt”) or SubRip Subtitle (“srt”). WebVTT (Web Video Text Tracks) is a text-based format for displaying timed text tracks, such as subtitles or captions, in HTML5 video on the web. SRT (SubRip Subtitle) is another popular text-based subtitle format: each entry pairs text with time codes, so subtitles or captions appear at precise moments during video playback.

- region (Optional): Specifies the AWS Region where the services run (e.g., “us-east-1”). If no value is provided, it defaults to ‘us-east-1’. You can refer to the link for the list of service endpoints and Regions.

- output_format (Optional): Specifies which additional text output to generate: the original transcript, the translated text, or both. By default, no transcript or translated text files are generated.
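To make the parameter rules above concrete, the sketch below models them with a dataclass and Enum classes. It is a hypothetical stand-in, not TalkLocal's actual UserInput: only `OutputFormat.TRANSLATED_TEXT` appears in this post, so the other `OutputFormat` member names and all Enum values are assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class SubtitleFormat(str, Enum):
    VTT = "vtt"
    SRT = "srt"


class OutputFormat(str, Enum):
    # Only TRANSLATED_TEXT is named in the post; the rest are hypothetical.
    TRANSCRIPT_TEXT = "transcript"
    TRANSLATED_TEXT = "translated"
    BOTH = "both"


@dataclass
class UserInputSketch:
    source_language: str  # Amazon Transcribe locale, e.g. "en-US"
    target_language: str  # Amazon Translate code, e.g. "es"
    subtitle_format: SubtitleFormat  # accepts "vtt"/"srt" strings too
    region: str = "us-east-1"  # default described in the post
    output_format: Optional[OutputFormat] = None  # None: no extra .txt files

    def __post_init__(self):
        # Normalize plain strings to Enum members; unknown values raise ValueError.
        self.subtitle_format = SubtitleFormat(self.subtitle_format)
        if self.output_format is not None:
            self.output_format = OutputFormat(self.output_format)
```

Validating in `__post_init__` means a typo such as `subtitle_format="vt"` fails immediately with a ValueError instead of surfacing later while subtitles are being written.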

Run the following two commands, each in its own terminal, to start the sample. If you prefer an IDE such as PyCharm, you can create separate run configurations to run both files concurrently and watch the subtitles being generated in real time:

    python app.py
    python server.py
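If you would rather start both scripts from a single terminal, a small launcher can run them as child processes. This is a hypothetical convenience helper, not part of the sample project; it simply starts each command, waits for all of them, and terminates them all if you press Ctrl+C.

```python
import subprocess
import sys
from typing import List, Sequence


def run_together(commands: Sequence[List[str]]) -> List[int]:
    """Start every command as a child process and return their exit codes.

    On Ctrl+C (KeyboardInterrupt), terminate all children before returning.
    """
    procs = [subprocess.Popen(cmd) for cmd in commands]
    try:
        return [p.wait() for p in procs]
    except KeyboardInterrupt:
        for p in procs:
            p.terminate()
        return [p.wait() for p in procs]


# Hypothetical command list for the two sample scripts, using the current interpreter.
SAMPLE_COMMANDS = [[sys.executable, "app.py"], [sys.executable, "server.py"]]
```

Calling `run_together(SAMPLE_COMMANDS)` from the sample-application/ directory would start both scripts; using `sys.executable` ensures they run in the same environment where TalkLocal is installed.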

If everything is set up correctly, the subtitle file is generated and continuously updated in real time as you speak into your computer's microphone. The file appears under the subtitle directory. Depending on the value of the output_format parameter, you may also see additional files under the output directory. You can then open http://localhost:5001/static/subtitle_viewer.html in a local browser to watch live subtitles appear in your target language as you speak.
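The difference between the two subtitle formats is easiest to see in code. The sketch below appends cues to a .vtt or .srt file and reads back the latest cue, roughly what happens as the file grows and the viewer refreshes. It is an illustration under assumptions (one cue per translated segment, times in seconds from the start of the session), not TalkLocal's actual writer; all names are hypothetical. The format details it encodes are standard: a WebVTT file starts with a `WEBVTT` line and uses `.` before milliseconds, while SRT numbers each cue and uses `,`.

```python
from pathlib import Path


def format_timestamp(seconds: float, fmt: str) -> str:
    """Format seconds as HH:MM:SS.mmm (vtt) or HH:MM:SS,mmm (srt)."""
    if fmt not in ("vtt", "srt"):
        raise ValueError(f"unsupported subtitle format: {fmt}")
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    sep = "." if fmt == "vtt" else ","
    return f"{hours:02}:{minutes:02}:{secs:02}{sep}{ms:03}"


def append_cue(path: Path, index: int, start: float, end: float, text: str, fmt: str) -> None:
    """Append one cue. A new VTT file gets the mandatory WEBVTT header;
    `index` is only written for SRT, whose cues are numbered."""
    new_file = not path.exists() or path.stat().st_size == 0
    with path.open("a", encoding="utf-8") as f:
        if fmt == "vtt" and new_file:
            f.write("WEBVTT\n\n")
        if fmt == "srt":
            f.write(f"{index}\n")
        f.write(f"{format_timestamp(start, fmt)} --> {format_timestamp(end, fmt)}\n")
        f.write(f"{text}\n\n")


def latest_cue_text(path: Path) -> str:
    """Return the text of the last cue, roughly what a polling viewer displays."""
    blocks = [b for b in path.read_text(encoding="utf-8").split("\n\n") if "-->" in b]
    if not blocks:
        return ""
    lines = blocks[-1].splitlines()
    timing = next(i for i, line in enumerate(lines) if "-->" in line)
    return "\n".join(lines[timing + 1:])
```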

You are all set to integrate the subtitle into your livestream now. To do that, you can move to the next section.

Integration with Streamlabs

Streamlabs Desktop is live streaming software with everything you need to stream to various platforms built in. It captures your webcam, games, desktop, music, and microphone in one place, with optimal video and audio quality. You can also create custom scenes for purposes such as “be right back” (BRB) screens or the end of a stream. You can learn more about Streamlabs Desktop on its website.

Let's now see how to broadcast to YouTube using Streamlabs Desktop, with subtitles generated by TalkLocal. You can follow a similar process to broadcast with subtitles to other platforms. To begin, open Streamlabs Desktop, as shown below.

To add a scene, select the plus (+) icon next to the Scene widget and enter a suitable name. As the diagram below illustrates, we added a new scene named “YouTube Stream.”

Once you have defined a new scene, you can add sources by clicking the plus (+) sign next to Sources. Sources are the elements you add to your live-streaming scene: visual or audio components that make up the content your audience sees and hears during a live stream.

Some common sources at the time of writing this blog post include:

- Video Capture Device: Allows you to add a webcam or other video capture device to your scene.

- Display Capture: Shows your entire computer screen or a specific window/application.

- Image: Adds an image file to your scene (e.g., logos, overlays, backgrounds).

- Text: Enables you to add text to your scene (e.g., labels, scrolling text, alerts).

- Media Source: Adds videos or audio files to your stream.

- Browser: Allows you to display web content such as a website or web-based widgets in your stream.

- Alert Box: Generates on-screen alerts for events like new subscribers, donations, or followers.

These sources can be customized, resized, moved, and layered within the scene to create a visually appealing and engaging live stream for the audience. Streamlabs Desktop provides an intuitive interface for managing and arranging these sources to create professional-looking live content.

As shown below, we add Video Capture Device, Browser Source, and Window Capture as sources to show a live presentation on Amazon Transcribe, and stream it to YouTube using the Streamlabs Desktop application. To learn how to do this, you can watch the tutorial here. To learn more about live streaming on YouTube, you can read the documentation.

The Video Capture Device source adds a webcam or other video capture device to the live stream's scene. We use the 1280x720 preset as the default and add the MacBook's built-in FaceTime camera, as shown below.

The Flask front end already renders the subtitles in an HTML page. To capture them in your stream, add a Browser Source that points to http://localhost:5001/static/subtitle_viewer.html.
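Before going live, it can help to confirm that the subtitle page is actually being served, since a Browser Source pointed at a dead URL simply shows nothing. A minimal sketch using only the standard library follows; the function name is hypothetical and the URL is the one used earlier in this post.

```python
import urllib.request

VIEWER_URL = "http://localhost:5001/static/subtitle_viewer.html"


def viewer_is_up(url: str = VIEWER_URL, timeout: float = 2.0) -> bool:
    """Return True if the subtitle page answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError, HTTPError, and timeouts are all OSError subclasses
        return False
```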

To show a PowerPoint deck, or whatever else you plan to present, select Window Capture as a source and choose the corresponding window, as seen in the screenshot below.

For a step-by-step guide on going live on YouTube, refer to the official guide here. The rest of this walkthrough assumes you have followed those steps: you have authenticated, connected your YouTube account, and arranged your scene appropriately.

After you have set up your stream, added your sources, and captured your audio, you are ready to go live. You can also stream to other platforms, such as Facebook and Twitch, with subtitles in over 70 languages. Visit the Streamlabs Help Center for additional guidance.

Step 3 is to go live by pressing the Go Live button. Your broadcast then begins on YouTube with subtitles displayed in Spanish. You can present in English, and your Spanish-speaking viewers can follow along in their own language.

Similarly, you can set up streaming with other services such as Amazon Interactive Video Service, AWS Media Services, or third-party products and services.

Conclusion

In summary, we've demonstrated how TalkLocal integrates into a live stream for real-time subtitle generation. This functionality can be incorporated into business applications and streaming platforms, or used to store transcripts and translations in databases for later reference. While challenges remain, the potential for a globally connected and inclusive world is well within reach through artificial intelligence and machine learning. I trust that this innovation sets the stage for a future where language ceases to be a barrier, fostering greater communication and understanding across diverse communities.

Thanks for reading.

Written by Wrick Talukdar

Sr. Machine Learning/Artificial Intelligence Architect @AWS | Tech Entrepreneur | Thought Leader | Generative AI / ML Product(s)

While Wrick is an AWS employee, opinions and statements on this blog are his own.

You can follow me at https://www.linkedin.com/in/wrick-talukdar/